
What is it?

This is yet another Makefile tool.

When I started a new project involving YAML descriptions, LaTeX, LilyPond and TikZ code generation, an unknown list of files, and build tools such as lualatex or other custom tools (strudel_of_lilypond), …, make was the logical tool to build the outputs, but it turned out to be more of a problem than a solution.

From long experience with make (and all its replacements, such as cmake, omake, and all kinds of proprietary solutions), I know that some issues keep coming back; this tool intends to solve them.

Yamake tries to solve these issues:

- mount allows you to build in a separate sandbox and leave your source directory clean

- recursive make, and the famous article Recursive Make Considered Harmful

- how to deal with code generators? How do you write a Makefile if you don't know which intermediate or final artefacts to build?

- how to break an unneeded build chain? In the chain a -> b -> c, if b is unchanged after modifying a and rebuilding b, why rebuild c?

- how to manage non-explicit dependencies, a.k.a. scanning?

- how to detect capture errors in a Makefile?

- how to generate a Makefile (instead of writing it)?

- how to get clean logs of artefact builds (instead of a stdout cluttered with hundreds of lines)?

- how to get a build report?

typo error

Consider this Makefile:

x.o : x.c
	gcc -o blah.o $<

If x.c compiles correctly, running make reports no error, yet the target x.o is never built. In big hand-written Makefiles, this is a real source of bugs.

logs

When you build a huge project, you get only two logs, stdout and stderr, which can be thousands of lines long. On top of that, if the build is parallelized, log lines are interleaved and just impossible to read.

mount

A nice feature of omake (I am not sure there is a make equivalent) is vmount: all source files are copied to another directory, called the sandbox, and every build takes place there.

What is nice is that

- the source directory is not polluted with built artefacts

- you can just delete your sandbox
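To make the mount step concrete, here is a minimal sketch using only the standard library: it recursively copies a source directory into a sandbox and never writes to the source side. The helper name `mount_tree` is hypothetical and not part of yamake (which mounts root nodes one by one), and this sketch does not handle symbolic links.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Hypothetical helper: copy every file under `src` into `sandbox`,
/// recreating the directory layout. Returns the number of files copied.
/// The source directory is only read, never modified.
pub fn mount_tree(src: &Path, sandbox: &Path) -> io::Result<usize> {
    let mut copied = 0;
    fs::create_dir_all(sandbox)?;
    for entry in fs::read_dir(src)? {
        let entry = entry?;
        let from = entry.path();
        let to = sandbox.join(entry.file_name());
        if entry.file_type()?.is_dir() {
            // Mirror subdirectories inside the sandbox.
            copied += mount_tree(&from, &to)?;
        } else {
            fs::copy(&from, &to)?;
            copied += 1;
        }
    }
    Ok(copied)
}
```

Deleting the sandbox (for example with `fs::remove_dir_all`) then resets the build without touching the sources.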

recursive makefile considered harmful

This is a well-known problem; see the article Recursive Make Considered Harmful.

Here is a quote from the article:

Caution

- It is very hard to get the order of the recursion into the subdirectories correct. This order is very unstable and frequently needs to be manually ‘‘tweaked.’’ Increasing the number of directories, or increasing the depth in the directory tree, cause this order to be increasingly unstable.

- It is often necessary to do more than one pass over the subdirectories to build the whole system. This, naturally, leads to extended build times.

- Because the builds take so long, some dependency information is omitted, otherwise development builds take unreasonable lengths of time, and the developers are unproductive. This usually leads to things not being updated when they need to be, requiring frequent “clean” builds from scratch, to ensure everything has actually been built.

- Because inter-directory dependencies are either omitted or too hard to express, the Makefiles are often written to build too much to ensure that nothing is left out.

- The inaccuracy of the dependencies, or the simple lack of dependencies, can result in a product which is incapable of building cleanly, requiring the build process to be carefully watched by a human.

- Related to the above, some projects are incapable of taking advantage of various “parallel make” impementations, because the build does patently silly things.

If you have worked on big projects, you know these problems, especially inter-directory dependencies.

Yamake avoids these issues by not recursing into directories: artefacts form a single DAG, regardless of the directory where each file lives.
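Since all artefacts live in one graph, a valid build order follows from a topological sort, whatever directory each file sits in. The sketch below uses Kahn's algorithm over plain path strings; `build_order` is a hypothetical helper, not yamake's scheduler, which also runs independent nodes in parallel.

```rust
use std::collections::{BTreeMap, VecDeque};

/// Returns the nodes in an order where every node comes after all of its
/// predecessors, or `None` if the edges contain a cycle.
/// An edge `(from, to)` means `to` is built from `from`.
pub fn build_order<'a>(edges: &[(&'a str, &'a str)]) -> Option<Vec<&'a str>> {
    // Number of not-yet-ordered predecessors of each node.
    let mut indegree: BTreeMap<&'a str, usize> = BTreeMap::new();
    let mut succs: BTreeMap<&'a str, Vec<&'a str>> = BTreeMap::new();
    for &(from, to) in edges {
        indegree.entry(from).or_insert(0);
        *indegree.entry(to).or_insert(0) += 1;
        succs.entry(from).or_default().push(to);
    }
    // Start from the sources: nodes without predecessors.
    let mut ready: VecDeque<&'a str> = indegree
        .iter()
        .filter(|(_, d)| **d == 0)
        .map(|(n, _)| *n)
        .collect();
    let mut order = Vec::new();
    while let Some(node) = ready.pop_front() {
        order.push(node);
        for &next in succs.get(node).map(Vec::as_slice).unwrap_or(&[]) {
            let d = indegree.get_mut(next).expect("every endpoint has an entry");
            *d -= 1;
            if *d == 0 {
                ready.push_back(next);
            }
        }
    }
    // Nodes left out of `order` are part of a cycle.
    if order.len() == indegree.len() { Some(order) } else { None }
}
```

Directory names play no role here: lib/add.o and project_C/main.o are just two nodes of the same graph.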

timestamp

Make uses the timestamp of a target to decide whether it needs to be rebuilt. Let's consider this scenario:

a.o: a.c a.h
	gcc -c $< -o $@

libproject.a : a.o b.o c.o
	ar rcs $@ $^

If you add a comment to a.h, a.o gets rebuilt, but because you only added a comment, a.o is unchanged after the rebuild. Its timestamp changes though, and this triggers a useless rebuild of libproject.a, and of all other artefacts further down the build path.

If you have a code generator, this is even worse, as many files get involved.

Yamake solves this issue by comparing the digests of the files, not their timestamps. In our example, since a.o has the same digest after the rebuild, the rebuild is not propagated.
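The rule fits in a few lines: after rebuilding a node, compare the digest of the new output with the previous one, and only propagate the rebuild if they differ. This sketch substitutes std's `DefaultHasher` for the SHA256 digests yamake stores, only to stay dependency-free; `DefaultHasher` is not a cryptographic hash, and the function names are hypothetical.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in content digest (yamake uses SHA256; this is NOT cryptographic).
pub fn digest(bytes: &[u8]) -> u64 {
    let mut h = DefaultHasher::new();
    bytes.hash(&mut h);
    h.finish()
}

/// After a node is rebuilt, its successors only need to be considered
/// if the output content changed. Timestamps are never looked at.
pub fn must_propagate(previous_output: &[u8], new_output: &[u8]) -> bool {
    digest(previous_output) != digest(new_output)
}
```

In the a.h example, the rebuilt a.o has the same bytes, so `must_propagate` returns false and libproject.a is left alone.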

expand

usual cases, all files are known

Standard Makefiles define rules, e.g.:

%.o : %.c
	gcc -c $< -o $@

libproject.a : a.o b.o c.o
	ar rcs $@ $^

which define the graph:

flowchart TD
a.c --> a.o --> libproject.a
b.c --> b.o --> libproject.a
c.c --> c.o --> libproject.a

now we have code generators, lists of files are unknown

Now, imagine that the list of files is unknown, because we have a code generator cg that takes an input file config.yml.

Our Makefile would then be this impossible one:

%.o : %.c
	gcc -c $< -o $@

unknown list of files : config.yml
	cg $^

libproject.a : unknown list of files
	ar rcs $@ $^

The usual solution with make is to give the generator a dedicated directory or filename pattern, and to use a wildcard.

yamake expand

Yamake takes another approach: name expansion. Our initial known graph has the nodes main.c, main.o, app, libapp.a and config.yml.

We have a code generator that generates C files from config.yml, but we don't know their list in advance.

Yamake allows nodes to have an expand method. In our example, the expansion creates 6 nodes (a.c, a.o, …) and the corresponding edges, shown in orange.


---
title: null
animate: null
animate-yml-file: expand2.yml
---
flowchart TD
config.yml econfa@--> ac
ac eacao@--> ao
ao ealib@--> libapp.a
config.yml econfb@--> bc
bc ebcbo@--> bo
bo eblib@--> libapp.a
config.yml econfc@--> cc
cc eccco@--> co
co eclib@--> libapp.a
ac(a.c) ; bc(b.c) ; cc(c.c) ; ao(a.o) ; bo(b.o) ; co(c.o) ;
main.c --> main.o --> app
libapp.a --> app
classDef class_active_node stroke-width:1px,color:black,stroke:black ;
classDef class_expanded_node stroke-width:1px,color:black,stroke:orange ;
classDef class_hidden_node stroke-width:1px,color:white,stroke:white,stroke-dasharray: 9,5,stroke-dashoffset: 900 ;
classDef class_active_edge stroke-width:3px,color:orange,stroke:orange;
classDef class_hidden_edge stroke-width:1px,stroke:white ;
classDef class_expanded_edge stroke-width:3px,stroke:orange ;
classDef class_animate_edge stroke-dasharray: 9,5,stroke-dashoffset: 900,animation: dash 25s linear infinite,color black;
class ac class_hidden_node;
class bc class_hidden_node;
class cc class_hidden_node;
class ao class_hidden_node;
class bo class_hidden_node;
class co class_hidden_node;
class econfa class_hidden_edge;
class econfb class_hidden_edge;
class econfc class_hidden_edge;
class eacao class_hidden_edge;
class ebcbo class_hidden_edge;
class eccco class_hidden_edge;
class ealib class_hidden_edge;
class eblib class_hidden_edge;
class eclib class_hidden_edge;

initial graph


---
title: null
animate: null
animate-yml-file: expand2.yml
---
flowchart TD
config.yml econfa@--> ac
ac eacao@--> ao
ao ealib@--> libapp.a
config.yml econfb@--> bc
bc ebcbo@--> bo
bo eblib@--> libapp.a
config.yml econfc@--> cc
cc eccco@--> co
co eclib@--> libapp.a
ac(a.c) ; bc(b.c) ; cc(c.c) ; ao(a.o) ; bo(b.o) ; co(c.o) ;
main.c --> main.o --> app
libapp.a --> app
classDef class_active_node stroke-width:1px,color:black,stroke:black ;
classDef class_expanded_node stroke-width:1px,color:black,stroke:orange ;
classDef class_hidden_node stroke-width:1px,color:white,stroke:white,stroke-dasharray: 9,5,stroke-dashoffset: 900 ;
classDef class_active_edge stroke-width:3px,color:orange,stroke:orange;
classDef class_hidden_edge stroke-width:1px,stroke:white ;
classDef class_expanded_edge stroke-width:3px,stroke:orange ;
classDef class_animate_edge stroke-dasharray: 9,5,stroke-dashoffset: 900,animation: dash 25s linear infinite,color black;
class ac class_expanded_node;
class bc class_expanded_node;
class cc class_expanded_node;
class ao class_expanded_node;
class bo class_expanded_node;
class co class_expanded_node;
class econfa class_expanded_edge;
class econfb class_expanded_edge;
class econfc class_expanded_edge;
class eacao class_active_edge;
class ebcbo class_active_edge;
class eccco class_active_edge;
class ealib class_expanded_edge;
class eblib class_expanded_edge;
class eclib class_expanded_edge;

after expansion
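Stripped of the yamake types, expansion boils down to turning a list of generated names into new nodes and edges. A minimal sketch, assuming the stems a, b and c have already been read from config.yml and representing nodes as path strings; in yamake the equivalent logic lives in a node's `expand` method returning an `ExpandResult`, and the free function below is hypothetical.

```rust
/// Hypothetical expansion: for each generated stem, create the `.c` and
/// `.o` nodes plus the edges config -> .c -> .o -> library.
pub fn expand(config: &str, library: &str, stems: &[&str]) -> (Vec<String>, Vec<(String, String)>) {
    let mut nodes = Vec::new();
    let mut edges = Vec::new();
    for stem in stems {
        let c = format!("{stem}.c");
        let o = format!("{stem}.o");
        edges.push((config.to_string(), c.clone()));
        edges.push((c.clone(), o.clone()));
        edges.push((o.clone(), library.to_string()));
        nodes.push(c);
        nodes.push(o);
    }
    (nodes, edges)
}
```

With three stems this yields the 6 nodes and 9 edges drawn in orange above.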

scan

Scanning is the action of adding dependencies by reading a source file. A typical example is C source code: scan the .c file to find the #include directives, and add the included files as dependencies. The same goes for C++ and, for my project, LaTeX, TikZ and LilyPond files, …

make relies on makedepend and omake has a scanner; with yamake, you write the scanner in Rust, as a trait implementation.
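For C, the core of such a scanner is extracting the file names of quoted #include directives. A deliberately naive sketch: it ignores angle-bracket system headers, comments and conditional compilation, and `scan_includes` is a hypothetical helper rather than yamake's `scan` method.

```rust
/// Return the file names of `#include "..."` directives, in order.
/// `#include <...>` system headers are skipped: they are not build nodes.
pub fn scan_includes(source: &str) -> Vec<String> {
    source
        .lines()
        .filter_map(|line| {
            // Allow whitespace around '#' and before the file name.
            let rest = line.trim_start().strip_prefix('#')?.trim_start();
            let rest = rest.strip_prefix("include")?.trim_start();
            let rest = rest.strip_prefix('"')?;
            let end = rest.find('"')?;
            Some(rest[..end].to_string())
        })
        .collect()
}
```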

logs

With make, you get the results of the build on stdout. This is really hard to read: if you have a compile error, especially in C++ with templates, you may get hundreds of error lines. If your build is multithreaded, it is even worse.

Yamake eases this process.

When building a node, yamake creates two files, one for stdout and one for stderr. They are placed in the sandbox directory.

The file <sandbox>/make-report.yml is generated at the end of each build and provides information about it. For instance, you can get the path of the logs for a given node:

yq '.nodes[] | select(.pathbuf == "project_expand/main.o") | .stdout_path' sandbox/make-report.yml

will return the path of the stdout captured when building this node.

To open the stderr log of every failed node, you could use:

for stderr in $(yq -r '.nodes[] | select(.status == "BuildFailed") | .stderr_path' sandbox/make-report.yml) ; do
  echo "$stderr"
  if [[ -n $stderr ]] ; then
    vim "$stderr"
  fi
done

Makefile

yamake is a library: once you have implemented your own rules (by implementing the GNode trait), you use it to create an executable that builds your project.

make has its own syntax, in which you write a Makefile. With yamake, you construct the graph yourself, either:

- in the main function, as in the documentation examples

- from a yml file of your own that describes your project

- by scanning your source tree and constructing nodes as you discover files

capture error

This is a classic error that happens when you don't use automatic variables such as $@:

a.o : a.c
	gcc -c -o b.o a.c

main.o : main.c
	gcc -c -o $@ $^

app : a.o main.o
	gcc -o $@ $^

When running make, you get no error because the compilation succeeds, but it produces b.o instead of the expected a.o. In the next steps of the build, linking app fails because a.o does not exist or, worse, a.o exists but was not updated and you link a stale version. This error is hard to spot.

After each build, yamake checks that the target file exists.
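The check can be sketched as follows: a successful command is not enough, the declared target must also exist in the sandbox. `check_target` and `Outcome` are hypothetical names used for illustration; yamake's own check may differ in its details.

```rust
use std::path::Path;

/// Outcome of one node build once the capture check is applied.
#[derive(Debug, PartialEq)]
pub enum Outcome {
    Built,
    CommandFailed,
    /// The command succeeded but did not produce the declared target
    /// (e.g. the recipe wrote b.o instead of a.o).
    TargetMissing,
}

pub fn check_target(command_succeeded: bool, sandbox: &Path, target: &Path) -> Outcome {
    if !command_succeeded {
        Outcome::CommandFailed
    } else if !sandbox.join(target).is_file() {
        Outcome::TargetMissing
    } else {
        Outcome::Built
    }
}
```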

example

Our example is a C project that we want to compile. You will find the sources of the project in sources of the C project.

To build this project, instead of writing a Makefile, we write our own tool, using the yamake crate. You will find the sources of this tool in sources of the demo tool

running the example

cargo run --example project_C -- -s demo_projects -b sandbox

This will:

- Copy source files from demo_projects to sandbox

- Scan C files for #include directives and add dependency edges

- Build all targets in parallel where possible

node statuses

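After a build, each node has one of these statuses:

- Initial: Not processed yet

- MountedChanged / MountedNotChanged: Source file copied to sandbox; its digest changed / did not change since the last build

- MountedFailed: Failed to copy source

- ScanIncomplete: Waiting for dependencies to be generated (e.g., by expand)

- Running: Build in progress

- BuildSuccess / BuildNotChanged: Successfully built; output changed / unchanged

- BuildNotRequired: Build skipped, nothing changed

- BuildFailed: Build failed

- AncestorFailed: Skipped because a dependency failed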

error handling

When a build fails:

- The failing node gets the BuildFailed status

- All dependent nodes get the AncestorFailed status

- make() returns false
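Propagating a failure amounts to a traversal of the successors of the failed node. A minimal sketch, assuming nodes are identified by path strings and using a trimmed-down, hypothetical `Status` enum instead of yamake's `GNodeStatus`:

```rust
use std::collections::{HashMap, VecDeque};

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Status {
    BuildSuccess,
    BuildFailed,
    AncestorFailed,
}

/// Mark `failed` as BuildFailed and every node reachable from it through
/// successor edges as AncestorFailed (breadth-first traversal).
pub fn propagate_failure(
    successors: &HashMap<&str, Vec<&str>>,
    statuses: &mut HashMap<String, Status>,
    failed: &str,
) {
    statuses.insert(failed.to_string(), Status::BuildFailed);
    let mut queue = VecDeque::from([failed]);
    while let Some(node) = queue.pop_front() {
        for &next in successors.get(node).into_iter().flatten() {
            // Visit each downstream node once.
            if statuses.get(next) != Some(&Status::AncestorFailed) {
                statuses.insert(next.to_string(), Status::AncestorFailed);
                queue.push_back(next);
            }
        }
    }
}
```

Nodes that do not depend on the failed one (main.o in the test) keep their status.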

How to use it

The workflow is:

- instantiate the graph

- use existing rules

- add the nodes

- add the edges

- for debugging purposes, plot the build graph

- call the make function

In the following pages, we illustrate the usage by building a program written in C. We use rules that already exist; to add your own rules, go to the section add your own rules.

instantiate the graph

The code examples are taken from examples/project_C/main.rs.

First, create an instance of G (yamake::model::G). cli holds the command-line arguments; here we pass srcdir and sandbox:

use argh::FromArgs;
use log::info;
use std::path::PathBuf;
use yamake::c_nodes::{AFile, CFile, HFile, OFile, XFile};
use yamake::model::G;

let mut g = G::new(srcdir, sandbox);

use existing rules

You need to have exiting rules to construct a graph. In this example, we use existing rules for building a program written in C, and using the gcc suite.

see this section for adding your own build rules.

#![allow(unused)]

fn main() {

// Using existing C build rules from yamake::c_nodes:

// - CFile: C source files (.c)

// - HFile: Header files (.h)

// - OFile: Object files (.o)

// - AFile: Static library files (.a)

// - XFile: Executable files

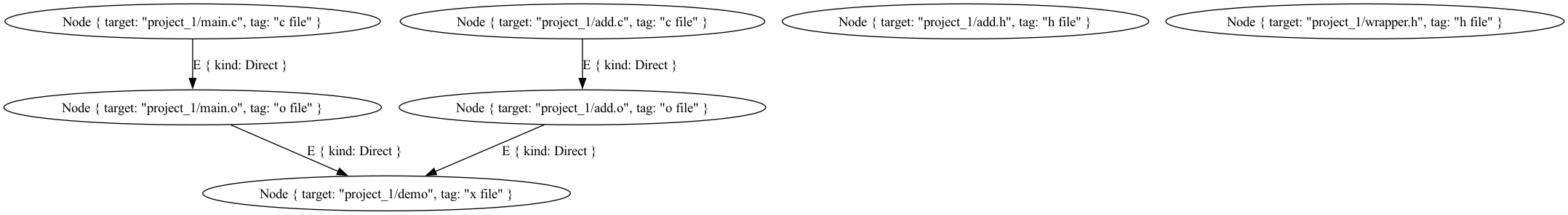

}add nodes

Here we assume that we already have structs that implement the yamake::model::GNode trait. In our example, we use these implementations:

- CFile for .c files

- HFile for .h files

- OFile for object files

- AFile for static library files

- XFile for executable files

See section …

In this demo, we add the nodes manually.

let main_c = g.add_root_node(CFile::new("project_C/main.c")).unwrap();
let main_o = g
    .add_node(OFile::new("project_C/main.o", vec![], vec![]))
    .unwrap();
let add_c = g.add_root_node(CFile::new("project_C/add.c")).unwrap();
let add_o = g
    .add_node(OFile::new(
        "project_C/add.o",
        vec![],
        vec!["-DYYY_defined".to_string()],
    ))
    .unwrap();
let _add_h = g.add_root_node(HFile::new("project_C/add.h")).unwrap();
let _wrapper_h = g.add_root_node(HFile::new("project_C/wrapper.h")).unwrap();
let project_a = g.add_node(AFile::new("project_C/libproject.a")).unwrap();
let app = g.add_node(XFile::new("project_C/app")).unwrap();

in a real project

In a real project, you might want to:

- scan the srcdir to find the .c and .h files (what you do manually in a Makefile)

- for each .c, create a .o node (the implicit rule of a Makefile)

- as in a real Makefile, state explicitly which objects and libraries to build, and from which sources
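The first two steps can be sketched with the standard library: walk the source directory, collect the .h files, and pair each .c file with the .o path the implicit rule would produce. The `discover` helper and `Discovered` struct are hypothetical; turning the paths into CFile, HFile and OFile nodes and edges would still go through yamake's add_root_node, add_node and add_edge calls.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Files found under a source root, with paths relative to that root.
#[derive(Debug, Default)]
pub struct Discovered {
    pub headers: Vec<PathBuf>,
    /// (source, object) pairs, e.g. (project_C/add.c, project_C/add.o).
    pub compile_units: Vec<(PathBuf, PathBuf)>,
}

pub fn discover(root: &Path, dir: &Path, out: &mut Discovered) -> io::Result<()> {
    for entry in fs::read_dir(dir)? {
        let path = entry?.path();
        if path.is_dir() {
            discover(root, &path, out)?;
            continue;
        }
        // Keep paths relative, like the node paths used above.
        let rel = path.strip_prefix(root).expect("path is under root").to_path_buf();
        match rel.extension().and_then(|e| e.to_str()) {
            Some("c") => {
                // Equivalent of the `%.o : %.c` implicit rule.
                let obj = rel.with_extension("o");
                out.compile_units.push((rel, obj));
            }
            Some("h") => out.headers.push(rel),
            _ => {}
        }
    }
    Ok(())
}
```

The directory listing order is unspecified, so sort the results if you need a stable graph.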

use the sandbox

For instance, use the sandbox as the header search path (the -I compilation option). Always refer to paths in the sandbox, not in the srcdir: built artefacts only exist in the sandbox, so anything that looks for them in the srcdir will fail.

Caution

Everything, except the mount, happens in the sandbox. All paths are relative to the sandbox.

paths are unique

Caution

Paths are unique: two nodes cannot have the same path; adding a second node with an existing path yields an error.
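The invariant itself is easy to sketch: keep a map from path to node index and refuse a second registration of the same path. `Registry` and `DuplicatePath` are hypothetical names; this is not yamake's `G` type.

```rust
use std::collections::HashMap;
use std::path::PathBuf;

#[derive(Debug, PartialEq)]
pub struct DuplicatePath(pub PathBuf);

#[derive(Default)]
pub struct Registry {
    by_path: HashMap<PathBuf, usize>,
    paths: Vec<PathBuf>,
}

impl Registry {
    /// Register a node by its path and return its index, or an error if
    /// another node already owns that path.
    pub fn add(&mut self, path: impl Into<PathBuf>) -> Result<usize, DuplicatePath> {
        let path = path.into();
        if self.by_path.contains_key(&path) {
            return Err(DuplicatePath(path));
        }
        let index = self.paths.len();
        self.by_path.insert(path.clone(), index);
        self.paths.push(path);
        Ok(index)
    }
}
```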

add edges

Here we draw the edges between the nodes.

We state explicitly that an object file (.o) is the result of the compilation of a source file (.c), and that linking .o files together produces an executable.

Here, we specify the graph, which allows yamake to process the nodes in the right order.

What is actually performed to build the nodes (linking, compiling, …) is in the implementation of OFile and XFile, which implement the yamake::model::GNode trait.

g.add_edge(main_c, main_o);
g.add_edge(main_o, app);
g.add_edge(add_c, add_o);
g.add_edge(add_o, project_a);
g.add_edge(project_a, app);

The scan will add new edges.

plot graph

[Figure: the build graph as plotted by yamake (Mermaid flowchart); node labels were lost in extraction]

We notice that the graph is not connected: the scanner will add edges. Notice that all the edges are labeled `Explicit`, meaning a direct dependency that was stated explicitly. Explicit also means that the dependency is required: it is an input of the rule that builds the output artefact.

scan

The scan is performed automatically during make(). It adds edges to the graph based on #include directives found in source files.

After the scan, we will have new edges:

- main.o depends on wrapper.h, and on add.h through wrapper.h

- add.o depends on add.h

The edges that were added by the scan are labeled Scanned.

Scan completion

The scan() method returns a tuple (bool, Vec<PathBuf>):

- First element: true if the scan is complete (all included files found), false otherwise

- Second element: list of discovered dependencies (header paths from #include directives)

When a scan is incomplete (missing files), the node is marked ScanIncomplete if there are other unbuilt nodes that could generate the missing files via expand().
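The (bool, Vec<PathBuf>) contract can be illustrated with a small resolver. A hypothetical sketch, assuming each include is looked up relative to the directory of the scanned file inside the sandbox (a real scanner would also follow the -I search path); only the files that exist are returned as dependencies, and a missing one makes the scan incomplete.

```rust
use std::path::{Path, PathBuf};

/// Resolve includes of `source` (a sandbox-relative path) and report
/// (complete, dependencies). `complete` is false as soon as one included
/// file does not exist yet, e.g. because an expand() has not run.
pub fn resolve_includes(sandbox: &Path, source: &Path, includes: &[&str]) -> (bool, Vec<PathBuf>) {
    let dir = source.parent().unwrap_or(Path::new(""));
    let mut complete = true;
    let mut deps = Vec::new();
    for inc in includes {
        let rel = dir.join(inc);
        if sandbox.join(&rel).is_file() {
            deps.push(rel);
        } else {
            complete = false;
        }
    }
    (complete, deps)
}
```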

Interaction with expand

When using expand() to generate code dynamically:

- A node scans its source files and finds includes that don't exist yet

- The node is marked ScanIncomplete and waits

- Another node runs expand() and creates the missing files

- On the next iteration, the scan succeeds and the node can build

This ensures nodes always build with up-to-date generated headers.

Orphan file detection

If a scanned file exists in the sandbox but has no corresponding graph node, it was likely created by a previous expand() run. The build system waits for expand to run again in case the file needs updating.

This is critical for incremental builds where source changes should regenerate derived files.

Scan is done in the sandbox: it only adds edges between existing nodes, it does not add nodes.

The scan reads the source files and tries to find dependencies. So in our example, main.o depends on main.c, and therefore this file is scanned. But if add.h was forgotten in the graph, this dependency will be ignored.

The scan is used to determine, using file digests, whether a successor node needs to be rebuilt. So if the scanner is not correct, the build will still succeed, but the "rebuild only what is necessary" feature will not work correctly.

make

Running the make command:

- Resets all node statuses to Initial

- Mounts root nodes (copies source files to sandbox)

- Compares source file digests to detect changes

- Scans for dependencies (adds edges for #include directives)

- Traverses the graph and builds output artifacts in parallel where possible

- Saves build results to make-report.yml

// make() will:
// 1. Mount root nodes (copy source files to sandbox)
// 2. Scan for dependencies (adds edges for #include directives)
// 3. Build all nodes in dependency order (parallel where possible)
let success = g.make();
info!("Build {}", if success { "succeeded" } else { "failed" });

Build output

After the build, <sandbox>/make-report.yml contains detailed information for each node:

- pathbuf: project_C/main.c
  status: MountedNotChanged
  digest: 5ebac2a26d27840f79382655e1956b0fc639cbdca5643abaf746f6e557ad39b8
  absolute_path: /path/to/sandbox/project_C/main.c
  stdout_path: null
  stderr_path: null
  predecessors: []
- pathbuf: project_C/main.o
  status: BuildNotRequired
  digest: ec1a9daf9c963db29ba4557660e3967a6eeb38dab5372e459d3a1be446c38417
  absolute_path: /path/to/sandbox/project_C/main.o
  stdout_path: /path/to/sandbox/logs/project_C/main.o.stdout
  stderr_path: /path/to/sandbox/logs/project_C/main.o.stderr
  predecessors:
    - pathbuf: project_C/main.c
      status: MountedNotChanged
    - pathbuf: project_C/wrapper.h
      status: MountedNotChanged

Build logs

Build logs

Build commands capture stdout and stderr to log files in <sandbox>/logs/:

- <sandbox>/logs/<node>.stdout: standard output

- <sandbox>/logs/<node>.stderr: standard error
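A minimal sketch of this logging scheme with `std::process::Command`: the quoted command line is written as the first line of both log files, then the child process appends its own output. `command_line` and `run_logged` are hypothetical helpers, and quoting each word with Rust's Debug formatting is an assumption; yamake's implementation may differ.

```rust
use std::fs::File;
use std::io::{self, Write};
use std::path::Path;
use std::process::{Command, Stdio};

/// Quote each word with Rust's Debug formatting: "gcc" "-c" ...
pub fn command_line(program: &str, args: &[&str]) -> String {
    std::iter::once(program)
        .chain(args.iter().copied())
        .map(|word| format!("{word:?}"))
        .collect::<Vec<_>>()
        .join(" ")
}

/// Run `program args...` with stdout and stderr redirected to two log
/// files whose first line is the command. Returns whether it succeeded.
pub fn run_logged(program: &str, args: &[&str], stdout_log: &Path, stderr_log: &Path) -> io::Result<bool> {
    let header = command_line(program, args);
    let mut out = File::create(stdout_log)?;
    let mut err = File::create(stderr_log)?;
    writeln!(out, "{header}")?;
    writeln!(err, "{header}")?;
    // The child inherits both handles, positioned after the header line.
    let status = Command::new(program)
        .args(args)
        .stdout(Stdio::from(out))
        .stderr(Stdio::from(err))
        .status()?;
    Ok(status.success())
}
```

One pair of files per node keeps parallel builds readable: each log holds the output of a single command.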

The first line of each log file contains the command that was executed:

"gcc" "-c" "-I" "sandbox" "-o" "sandbox/project_C/main.o" "sandbox/project_C/main.c"

Incremental builds

The make() function can be called multiple times on the same graph. On subsequent runs:

- Source files with unchanged digests get status MountedNotChanged

- Built files that don't need rebuilding get status BuildNotRequired

- Only files with changed inputs or missing outputs are rebuilt

report

The make() method returns a boolean indicating success or failure. You can also inspect the nodes_status map to see the status of each node after the build.

the status types

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash, Serialize, Deserialize)]
pub enum GNodeStatus {
    Initial,
    MountedChanged,
    MountedNotChanged,
    MountedFailed,
    ScanIncomplete,
    Running,
    BuildSuccess,
    BuildNotChanged,
    BuildNotRequired,
    BuildFailed,
    AncestorFailed,
}

Status meanings:

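- Initial: Node has not been processed yet

- MountedChanged: Root node source file has been copied to sandbox; its digest changed since the last build

- MountedNotChanged: Root node source file has been copied to sandbox; its digest is unchanged

- MountedFailed: Failed to copy source file to sandbox

- ScanIncomplete: Waiting for dependencies to be generated (e.g., by expand)

- Running: Node build is currently in progress

- BuildSuccess: Node was built successfully and its output changed

- BuildNotChanged: Node was built successfully but its output is unchanged

- BuildNotRequired: Build skipped (predecessors unchanged and output digest matches)

- BuildFailed: Node build failed

- AncestorFailed: A predecessor node failed, so this node was skipped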

How to add rules

To add rules, you need to define the nodes of your build graph.

root nodes

A root node implements the GRootNode trait:

/// A root node in the build graph representing a source file.
///
/// Root nodes are inputs to the build system (e.g., source files, configuration files)
/// that do not need to be built themselves. They automatically implement [`GNode`] with
/// a no-op `build` that returns `true`.
///
/// # Examples
///
/// ## Basic GRootNode
///
/// A simple root node representing a source file:
///
/// ```
/// use std::path::PathBuf;
/// use yamake::model::GRootNode;
///
/// struct SourceFile {
///     name: String,
/// }
///
/// impl GRootNode for SourceFile {
///     fn tag(&self) -> String {
///         "SourceFile".to_string()
///     }
///
///     fn pathbuf(&self) -> PathBuf {
///         PathBuf::from(&self.name)
///     }
/// }
///
/// let source = SourceFile { name: "main.c".to_string() };
/// assert_eq!(source.tag(), "SourceFile");
/// assert_eq!(source.pathbuf(), PathBuf::from("main.c"));
/// ```
///
/// ## GRootNode with expand
///
/// A root node that generates additional nodes and edges when expanded:
///
/// ```
/// use std::path::{Path, PathBuf};
/// use yamake::model::{Edge, ExpandResult, GNode, GRootNode};
///
/// /// A generated node produced by expand.
/// struct GeneratedNode {
///     name: String,
/// }
///
/// impl GNode for GeneratedNode {
///     fn tag(&self) -> String {
///         "GeneratedNode".to_string()
///     }
///     fn pathbuf(&self) -> PathBuf {
///         PathBuf::from(&self.name)
///     }
///     fn build(&self, _sandbox: &Path, _predecessors: &[&(dyn GNode + Send + Sync)]) -> bool {
///         true
///     }
/// }
///
/// /// A root node that expands to generate additional nodes.
/// struct ConfigFile {
///     name: String,
/// }
///
/// impl GRootNode for ConfigFile {
///     fn tag(&self) -> String {
///         "ConfigFile".to_string()
///     }
///
///     fn pathbuf(&self) -> PathBuf {
///         PathBuf::from(&self.name)
///     }
///
///     fn expand(
///         &self,
///         _sandbox: &Path,
///         _predecessors: &[&(dyn GNode + Send + Sync)],
///     ) -> ExpandResult {
///         // Generate nodes based on configuration
///         let node1 = GeneratedNode { name: "generated/file1.o".to_string() };
///         let node2 = GeneratedNode { name: "generated/file2.o".to_string() };
///
///         let nodes: Vec<Box<dyn GNode + Send + Sync>> = vec![
///             Box::new(node1),
///             Box::new(node2),
///         ];
///
///         // No edges in this example
///         let edges: Vec<Edge> = vec![];
///
///         Ok((nodes, edges))
///     }
/// }
///
/// let config = ConfigFile { name: "config.yml".to_string() };
/// let sandbox = PathBuf::from("/tmp/sandbox");
/// let predecessors: Vec<&(dyn GNode + Send + Sync)> = vec![];
///
/// // Use GRootNode::expand to disambiguate from the blanket GNode impl
/// let (nodes, edges) = GRootNode::expand(&config, &sandbox, &predecessors).unwrap();
/// assert_eq!(nodes.len(), 2);
/// assert_eq!(nodes[0].pathbuf(), PathBuf::from("generated/file1.o"));
/// assert_eq!(nodes[1].pathbuf(), PathBuf::from("generated/file2.o"));
/// ```
pub trait GRootNode {
    /// Expands this node to generate additional nodes and edges.
    ///
    /// Called after the node is built to dynamically add new nodes and edges
    /// to the build graph. This is useful for nodes that generate code or
    /// configuration that determines additional build targets.
    ///
    /// Returns `Ok((nodes_to_add, edges_to_add))` on success, or an `ExpandError` on failure.
    fn expand(
        &self,
        _sandbox: &Path,
        _predecessors: &[&(dyn GNode + Send + Sync)],
    ) -> ExpandResult {
        Ok((Vec::new(), Vec::new()))
    }

    /// Returns a tag identifying the type of this node.
    fn tag(&self) -> String;

    /// Returns the path associated with this node.
    fn pathbuf(&self) -> PathBuf;
}

built nodes

Nodes that are not roots have predecessors. They must implement the yamake::model::GNode trait:

pub trait GNode: Send + Sync {
    fn build(&self, _sandbox: &Path, _predecessors: &[&(dyn GNode + Send + Sync)]) -> bool {
        panic!("build not implemented for {}", self.pathbuf().display())
    }

    fn scan(
        &self,
        _sandbox: &Path,
        _predecessors: &[&(dyn GNode + Send + Sync)],
    ) -> (bool, Vec<PathBuf>) {
        (true, Vec::new())
    }

    fn expand(
        &self,
        _sandbox: &Path,
        _predecessors: &[&(dyn GNode + Send + Sync)],
    ) -> ExpandResult {
        Ok((Vec::new(), Vec::new()))
    }

    fn tag(&self) -> String;

    fn pathbuf(&self) -> PathBuf;
}

tag

scan

build

Graph life

- Node life cycle

- node target

- node statuses

- node type

- digest

- source node

- incremental builds

- parallel builds

Node life cycle

node target

The target of a node is a file in the sandbox. Nodes and targets are in a one-to-one relation.

node statuses

Each node has a status that tracks its state during the build process:

| Status | Description |
|---|---|
| Initial | Node has not been processed yet |
| MountedChanged | Source file mounted, digest changed since last build |
| MountedNotChanged | Source file mounted, digest unchanged |
| MountedFailed | Failed to mount source file |
| ScanIncomplete | Waiting for dependencies to be generated (e.g., by expand) |
| Running | Node is currently being built |
| BuildSuccess | Build completed successfully with changed output |
| BuildNotChanged | Build completed successfully but output unchanged |
| BuildNotRequired | Build skipped (predecessors unchanged and output digest matches) |
| BuildFailed | Build failed |
| AncestorFailed | Skipped because a predecessor failed |

node type

---
title: node life cycle
---
flowchart
start@{ shape: f-circ}
start --> decision_has_preds
decision_has_preds{node
has preds ?}:::choice
decision_file_exists_in_sources{file
in sources ?}:::choice
decision_digest_changed{
digest
changed ?
}:::choice
decision_all_preds_ok{
all preds
ok ?
}:::choice
decision_some_preds_changed{
some preds
changed ?
}:::choice
decision_build_success{
build
success ?
}:::choice
decision_output_digest{
output
digest
changed ?
}:::choice
missing_source[MountedFailed]
decision_has_preds -- no --> decision_file_exists_in_sources
decision_file_exists_in_sources -- yes --> mount:::action
decision_file_exists_in_sources -- no --> missing_source:::ko
decision_has_preds -- yes --> decision_all_preds_ok
mount --> decision_digest_changed
decision_digest_changed -- yes --> mounted_changed[MountedChanged]:::changed
decision_digest_changed -- no --> mounted_not_changed[MountedNotChanged]:::unchanged
%%% build branch
ancestor_failed[AncestorFailed]
scan_incomplete[ScanIncomplete]
decision_all_preds_ok -- no -----> ancestor_failed:::ko
decision_all_preds_ok -- yes --> decision_scan_complete{scan
complete ?}:::choice
decision_scan_complete -- no --> scan_incomplete:::waiting
decision_scan_complete -- yes --> decision_some_preds_changed
scan_incomplete --> decision_progress_made{progress
made ?}:::choice
decision_progress_made -- yes --> decision_scan_complete
decision_progress_made -- no --> decision_some_preds_changed
build:::action
decision_some_preds_changed -- yes --> build
decision_some_preds_changed -- no --> decision_output_digest
decision_output_digest -- yes --> build
decision_output_digest -- no --> build_not_required[BuildNotRequired]:::unchanged
build --> decision_build_success
decision_build_success -- yes --> decision_final_digest{output
digest
changed ?}:::choice
decision_build_success -- no --> build_failed[BuildFailed]:::ko
decision_final_digest -- yes --> build_success[BuildSuccess]:::changed
decision_final_digest -- no --> build_not_changed[BuildNotChanged]:::unchanged
classDef ko fill:#f00,color:white,font-weight:bold,stroke-width:2px,stroke:yellow
classDef changed fill:#0ff,color:black,font-weight:bold,stroke-width:2px,stroke:yellow
classDef unchanged fill:#0f0,color:black,font-weight:bold,stroke-width:2px,stroke:yellow
classDef waiting fill:#FFD700,color:black,font-weight:bold,stroke-width:2px,stroke:yellow
classDef action fill:#FF8C00,color:black,font-weight:bold,stroke-width:2px,stroke:black,shape:bolt
classDef choice fill:lavender,color:black,font-weight:bold,stroke-width:2px,stroke:red,shape: circle

digest

Digests (SHA256 hashes) of nodes are stored in make-report.yml in the sandbox. The file contains an array of OutputInfo entries:

- pathbuf: project_C/main.c
  status: MountedNotChanged
  digest: 5ebac2a26d27840f79382655e1956b0fc639cbdca5643abaf746f6e557ad39b8
  absolute_path: /path/to/sandbox/project_C/main.c
  stdout_path: null
  stderr_path: null
  predecessors: []
- pathbuf: project_C/main.o
  status: BuildNotRequired
  digest: ec1a9daf9c963db29ba4557660e3967a6eeb38dab5372e459d3a1be446c38417
  absolute_path: /path/to/sandbox/project_C/main.o
  stdout_path: /path/to/sandbox/logs/project_C/main.o.stdout
  stderr_path: /path/to/sandbox/logs/project_C/main.o.stderr
  predecessors:
    - pathbuf: project_C/main.c
      status: MountedNotChanged

Each entry includes:

- pathbuf: relative path to the file
- status: final node status after the build
- digest: SHA256 hash of the file contents
- absolute_path: absolute path to the file in the sandbox
- stdout_path / stderr_path: paths to the build log files (null for source files)
- predecessors: list of direct predecessors with their status
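The entry layout of make-report.yml maps naturally onto a Rust struct. The following is a minimal sketch, assuming field types inferred from the example report (the real `OutputInfo` may differ). `Predecessor` and `to_yaml` are illustrative names, and the YAML is written by hand only to keep the sketch free of external crates.

```rust
use std::fmt::Write;

/// Node statuses that can appear in make-report.yml (names taken from the state diagram).
#[allow(dead_code)]
#[derive(Debug, Clone, Copy, PartialEq)]
enum Status {
    MountedChanged,
    MountedNotChanged,
    ScanIncomplete,
    AncestorFailed,
    BuildNotRequired,
    BuildSuccess,
    BuildNotChanged,
    BuildFailed,
}

/// A predecessor as listed inside an entry: path and status only.
struct Predecessor {
    pathbuf: String,
    status: Status,
}

/// One entry of the top-level array (field types are assumptions).
struct OutputInfo {
    pathbuf: String,
    status: Status,
    digest: String,
    absolute_path: String,
    stdout_path: Option<String>, // None, written as `null`, for source files
    stderr_path: Option<String>,
    predecessors: Vec<Predecessor>,
}

impl OutputInfo {
    /// Render the entry in the same layout as the example report.
    fn to_yaml(&self) -> String {
        let or_null = |p: &Option<String>| p.as_deref().unwrap_or("null").to_string();
        let mut s = String::new();
        writeln!(s, "- pathbuf: {}", self.pathbuf).unwrap();
        writeln!(s, "  status: {:?}", self.status).unwrap();
        writeln!(s, "  digest: {}", self.digest).unwrap();
        writeln!(s, "  absolute_path: {}", self.absolute_path).unwrap();
        writeln!(s, "  stdout_path: {}", or_null(&self.stdout_path)).unwrap();
        writeln!(s, "  stderr_path: {}", or_null(&self.stderr_path)).unwrap();
        if self.predecessors.is_empty() {
            writeln!(s, "  predecessors: []").unwrap();
        } else {
            writeln!(s, "  predecessors:").unwrap();
            for p in &self.predecessors {
                writeln!(s, "    - pathbuf: {}", p.pathbuf).unwrap();
                writeln!(s, "      status: {:?}", p.status).unwrap();
            }
        }
        s
    }
}
```

Deriving `Debug` on `Status` gives the variant names verbatim, which is exactly how statuses are spelled in the report.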

On subsequent builds, digests are compared to determine whether files have changed:

- Source files: compared before mounting to set MountedChanged or MountedNotChanged
- Built files: compared before the build to decide BuildNotRequired, and after the build to set BuildSuccess or BuildNotChanged

source node

A source node has no predecessor. It is a file in the source directory, and there is no rule to build it. It is mounted (copied from source directory to sandbox). When mounted, its digest is compared to the previous digest stored in make-report.yml.
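Mounting a source node amounts to a copy followed by a digest comparison. The following is a minimal sketch under stated assumptions. Rust's standard library has no SHA-256, so `DefaultHasher` stands in for it; its output is neither cryptographic nor guaranteed stable across Rust releases, so it would not be suitable for a persisted report. The previous digests are passed in as a map instead of being read from make-report.yml. `mount_source` and `MountStatus` are hypothetical names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::fs;
use std::hash::{Hash, Hasher};
use std::io;
use std::path::Path;

#[derive(Debug, PartialEq)]
enum MountStatus {
    MountedChanged,
    MountedNotChanged,
}

/// Stand-in digest (assumption): Yamake stores SHA256, std only offers DefaultHasher.
fn digest(bytes: &[u8]) -> String {
    let mut h = DefaultHasher::new();
    bytes.hash(&mut h);
    format!("{:016x}", h.finish())
}

/// Copy `rel` from `src_dir` into `sandbox`, then compare its digest with the
/// digest recorded by the previous build (if any).
fn mount_source(
    src_dir: &Path,
    sandbox: &Path,
    rel: &str,
    previous: &HashMap<String, String>,
) -> io::Result<(MountStatus, String)> {
    let from = src_dir.join(rel);
    let to = sandbox.join(rel);
    if let Some(parent) = to.parent() {
        fs::create_dir_all(parent)?;
    }
    fs::copy(&from, &to)?;
    let d = digest(&fs::read(&to)?);
    let status = match previous.get(rel) {
        Some(old) if *old == d => MountStatus::MountedNotChanged,
        _ => MountStatus::MountedChanged, // new file, or different contents
    };
    Ok((status, d))
}
```

A file that has no previous digest at all (first build, or newly added) is treated as changed, which is the conservative choice.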

incremental builds

The build system supports incremental builds by tracking:

- Source file digests: Detect when source files change

- Output file digests: Avoid rebuilding when output would be identical

- Predecessor statuses: Skip builds when all predecessors are unchanged

When all predecessors have status MountedNotChanged, BuildNotChanged, or BuildNotRequired, the output file is checked:

- If it exists and its digest matches the previous build, the status is set to BuildNotRequired.
- Otherwise, the build runs and its result is compared with the previous output:
  - BuildSuccess if the output digest changed
  - BuildNotChanged if the output digest is unchanged (e.g., after only adding a comment to the source)
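The incremental rules above, together with the AncestorFailed branch of the state diagram, fit in two small functions: one decides before building whether the build can be skipped, the other classifies the result afterwards. The following is a sketch, not Yamake's code. The status names come from the report, while `before_build`, `after_build` and the digest-as-string representation are assumptions.

```rust
/// Statuses involved in the incremental decision (names as in make-report.yml).
#[allow(dead_code)]
#[derive(Debug, Clone, Copy, PartialEq)]
enum Status {
    MountedChanged,
    MountedNotChanged,
    AncestorFailed,
    BuildNotRequired,
    BuildSuccess,
    BuildNotChanged,
    BuildFailed,
}

impl Status {
    /// Predecessor statuses that count as "unchanged".
    fn is_unchanged(self) -> bool {
        matches!(self, Status::MountedNotChanged | Status::BuildNotChanged | Status::BuildNotRequired)
    }
    fn is_failed(self) -> bool {
        matches!(self, Status::BuildFailed | Status::AncestorFailed)
    }
}

/// Before building. `existing` is the digest of the output currently in the
/// sandbox (None if missing), `previous` the digest recorded by the last build.
/// Returns Some(final status) when the build is skipped, None when it must run.
fn before_build(preds: &[Status], existing: Option<&str>, previous: Option<&str>) -> Option<Status> {
    if preds.iter().any(|s| s.is_failed()) {
        return Some(Status::AncestorFailed);
    }
    let all_unchanged = preds.iter().all(|s| s.is_unchanged());
    match (existing, previous) {
        (Some(cur), Some(prev)) if all_unchanged && cur == prev => Some(Status::BuildNotRequired),
        _ => None,
    }
}

/// After building: classify the result by comparing output digests.
fn after_build(succeeded: bool, new_digest: Option<&str>, previous: Option<&str>) -> Status {
    if !succeeded {
        return Status::BuildFailed;
    }
    match (new_digest, previous) {
        (Some(n), Some(p)) if n == p => Status::BuildNotChanged,
        _ => Status::BuildSuccess,
    }
}
```

Because BuildNotChanged counts as unchanged for successors, a comment-only edit to `main.c` rebuilds `main.o` but stops the chain there: `demo` sees only unchanged predecessors.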

parallel builds

Builds are executed concurrently using Rayon. Nodes at the same dependency level can be built in parallel, while respecting the dependency graph.

The build phase operates in three stages:

- Categorization (sequential): determine which nodes are ready to build
  - Mark AncestorFailed for nodes with failed predecessors
  - Mark BuildNotRequired for nodes with unchanged predecessors and a matching output digest
  - Collect the nodes ready to build (all predecessors ready, no failures)
- Build (parallel): execute builds concurrently using rayon::par_iter()
- Status update (sequential): apply the build results to the node statuses

This approach maximizes parallelism while ensuring correct dependency ordering.
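The three-stage loop can be sketched as follows. To keep the example free of external crates, stage 2 uses `std::thread::scope` with one thread per ready node instead of `rayon::par_iter()`; with Rayon the same stage would be a `par_iter().map(...)` over the ready nodes. The graph is simplified to indexed nodes with predecessor lists, the BuildNotRequired check is left out, nodes without predecessors are simply "built" by the closure (standing in for mounting), and `run_build` is a hypothetical name.

```rust
use std::thread;

/// Simplified statuses for this sketch.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Status {
    Pending,
    BuildSuccess,
    BuildFailed,
    AncestorFailed,
}

/// Drive the build round by round. `preds[i]` lists the predecessors of node i;
/// `build(i)` runs the (hypothetical) build command for node i and reports success.
fn run_build<F>(preds: &[Vec<usize>], build: F) -> Vec<Status>
where
    F: Fn(usize) -> bool + Sync,
{
    let mut status = vec![Status::Pending; preds.len()];
    loop {
        // Stage 1, categorization (sequential).
        let mut ready = Vec::new();
        let mut marked = false;
        for i in 0..preds.len() {
            if status[i] != Status::Pending {
                continue;
            }
            let any_failed = preds[i]
                .iter()
                .any(|&p| matches!(status[p], Status::BuildFailed | Status::AncestorFailed));
            let all_ready = preds[i].iter().all(|&p| status[p] == Status::BuildSuccess);
            if any_failed {
                status[i] = Status::AncestorFailed;
                marked = true;
            } else if all_ready {
                ready.push(i);
            }
        }
        if ready.is_empty() && !marked {
            break; // nothing left that can make progress
        }
        // Stage 2, build (parallel): one scoped thread per ready node.
        let build_ref = &build;
        let results: Vec<(usize, bool)> = thread::scope(|scope| {
            let handles: Vec<_> = ready
                .iter()
                .map(|&i| scope.spawn(move || (i, build_ref(i))))
                .collect();
            handles.into_iter().map(|h| h.join().unwrap()).collect()
        });
        // Stage 3, status update (sequential).
        for (i, ok) in results {
            status[i] = if ok { Status::BuildSuccess } else { Status::BuildFailed };
        }
    }
    status
}
```

Only stage 2 touches threads; stages 1 and 3 own the status vector exclusively, so no locking is needed.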

Generated Nodes

first

First, we have a description of messages in different languages, in a YAML file.

We also have a code generator, written in Rust, whose source code is part of the project.

The generator produces C files, and our main.c prints some of the messages.

file languages.yml

- language: English
  helloworld: Hello, World!
- language: French
  helloworld: Bonjour, le Monde!
- language: Spanish
  helloworld: Hola, Mundo!
- language: German
  helloworld: Hallo, Welt!
- language: Japanese
  helloworld: こんにちは、世界!
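To make the generator step concrete, here is a hypothetical sketch of what a Rust generator could emit for this file: one `<language>.h` and one `<language>.c` per entry, plus an umbrella `languages.h`, matching the file names in the final graph. The real generator's output is not shown in this document. The C function names (`helloworld_english`, ...) and the minimal parser, which only understands the flat two-key layout above, are assumptions.

```rust
/// One entry of languages.yml.
struct Greeting {
    language: String,
    helloworld: String,
}

/// Parse only the flat layout shown above: `- language: X` then `helloworld: Y`.
/// This is not a general YAML parser.
fn parse_greetings(yaml: &str) -> Vec<Greeting> {
    let mut out: Vec<Greeting> = Vec::new();
    for line in yaml.lines().map(str::trim) {
        if let Some(lang) = line.strip_prefix("- language:") {
            out.push(Greeting { language: lang.trim().to_string(), helloworld: String::new() });
        } else if let Some(msg) = line.strip_prefix("helloworld:") {
            if let Some(last) = out.last_mut() {
                last.helloworld = msg.trim().to_string();
            }
        }
    }
    out
}

/// Produce (file name, contents) pairs: `<lang>.h` and `<lang>.c` per entry,
/// plus an umbrella `languages.h` that includes every per-language header.
fn generate_c(greetings: &[Greeting]) -> Vec<(String, String)> {
    let mut files = Vec::new();
    let mut umbrella = String::from("#pragma once\n");
    for g in greetings {
        let stem = g.language.to_lowercase();
        // Escape backslashes and quotes so the message is a valid C string literal.
        let lit = g.helloworld.replace('\\', "\\\\").replace('"', "\\\"");
        files.push((
            format!("{stem}.h"),
            format!("#pragma once\nconst char *helloworld_{stem}(void);\n"),
        ));
        files.push((
            format!("{stem}.c"),
            format!("#include \"{stem}.h\"\nconst char *helloworld_{stem}(void) {{ return \"{lit}\"; }}\n"),
        ));
        umbrella.push_str(&format!("#include \"{stem}.h\"\n"));
    }
    files.push(("languages.h".to_string(), umbrella));
    files
}
```

The point for the build system is that the list of output names depends on the file contents, so it is only known after the generator has run.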

C source and header files will be generated from the languages.yml file, but we do not know their names yet, so we cannot build the complete build graph.

Our graph therefore looks like this, but it is incomplete:

We have:

- greetings.yml and main.c are source files, and will be mounted
- main.o will be compiled from main.c (the header files are still missing)
- the executable file demo will be the product of linking liblanguages.a and main.o
- and … liblanguages.a is a library that will be built from C files generated from greetings.yml, but we do not have these files yet

The green arrow shows the dependency that will trigger the expansion.

---

---

flowchart

greetings_yml([greetings.yml]):::source

main_c([main.c]):::source

main_o{{main.o}}:::ofile

liblanguages_a{{liblanguages.a}}:::ofile

demo{{demo}}:::ofile

main_c ec1@--> main_o

liblanguages_a ec3@--> demo

main_o ec4@--> demo

greetings_yml ex1@--> liblanguages_a

%% classDef source fill:#f96

classDef generated fill:#bbf,stroke:#f66,stroke-width:2px,color:#fff,stroke-dasharray: 5 5

classDef ofile fill:#03f,color:#f66

classDef e_htosource stroke:#aaa,stroke-width:0.7px,stroke-dasharray: 10,5;

class es1,es2,es3,es4,es5,es6,es7,es8,es9,es10 e_htosource;

classDef e_generate stroke:#f00,stroke-width:1px;

class eg1,eg2,eg3,eg4,eg5,eg6,eg7 e_generate;

classDef e_compile stroke:#00f,stroke-width:1px;

class ec1,ec2,ec3,ec4,ec5,ec6,ec7 e_compile;

classDef e_expand stroke:#3f3,stroke-width:3px,color:red;

class ex1,ex2,ex3,ex4,ex5,ex6,ex7 e_expand;

one solution

One solution would be to call the code generator first, get the list of generated files, and then construct our build graph. This would work here, but not in the more general case, where the generated files are themselves used to build other tools, which in turn generate other files.

chosen solution

For each node, we have an expand trait. It usually does nothing, but in our case, expand will:

- generate the files in the sandbox

- add these files to the graph

- add edges
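The expand mechanism can be sketched as a trait whose default method does nothing, overridden by generator nodes. The following is a minimal sketch, assuming a graph stored as node name to predecessor names. The `Expand` trait signature, the `Graph` type and `GreetingsGenerator` are illustrative, not Yamake's actual API, and writing the generated files into the sandbox is replaced by a list of languages given up front.

```rust
use std::collections::BTreeMap;

/// Minimal build graph: node name -> names of its predecessors.
#[derive(Default)]
struct Graph {
    preds: BTreeMap<String, Vec<String>>,
}

impl Graph {
    fn add_node(&mut self, name: &str) {
        self.preds.entry(name.to_string()).or_default();
    }
    fn add_edge(&mut self, from: &str, to: &str) {
        self.add_node(from);
        self.preds.entry(to.to_string()).or_default().push(from.to_string());
    }
}

/// Hypothetical expand hook: the default implementation does nothing.
trait Expand {
    fn expand(&self, _graph: &mut Graph) {}
}

/// An ordinary node keeps the default no-op.
struct CSource;
impl Expand for CSource {}

/// A generator node: it would write its files to the sandbox, then add them
/// and their edges to the graph.
struct GreetingsGenerator {
    input: String,
    languages: Vec<String>,
    library: String,
}

impl Expand for GreetingsGenerator {
    fn expand(&self, graph: &mut Graph) {
        for lang in &self.languages {
            let (c, o) = (format!("{lang}.c"), format!("{lang}.o"));
            // (writing `c` into the sandbox would happen here)
            graph.add_edge(&self.input, &c); // code generation
            graph.add_edge(&c, &o); // compilation
            graph.add_edge(&o, &self.library); // archive into the library
        }
    }
}
```

Since expansion only adds nodes and edges, a newly added `.o` node is then handled like any other node: scanned, built, and compared by digest.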

the final graph

We have the nodes:

- in orange, the sources
- in lavender with a green dotted border, expanded nodes whose files are generated in the sandbox
- in blue with a green dotted border, expanded nodes that are .o files; they will be built later
- in blue, the compiled files

and the edges:

- in red, the code generation
- in green, the expansion
- in blue, the compilation and linking of C source code
- dotted, the scanning of transitive #include directives

Frame 1 / 3

---

title: null

animate: null

animate-yml-file: expand.yml

---

flowchart

greetings_yml([greetings.yml]):::source

greetings_json([greetings.json]):::json

main_c([main.c]):::source

subgraph expanded nodes

%%subgraph generated files

languages_h{{languages.h}}:::generated

english_h{{english.h}}:::generated

german_h{{german.h}}:::generated

french_h{{french.h}}:::generated

english_c{{english.c}}:::generated

german_c{{german.c}}:::generated

french_c{{french.c}}:::generated

%%end

%%subgraph expanded files

english_o{{english.o}}:::oexpanded

german_o{{german.o}}:::oexpanded

french_o{{french.o}}:::oexpanded

%%end

end

main_o{{main.o}}:::ofile

liblanguages_a{{liblanguages.a}}:::ofile

demo{{demo}}:::ofile

greetings_json eg1@--> english_h

greetings_json eg2@--> german_h

greetings_json eg3@--> french_h

greetings_json eg4@--> english_c

greetings_json eg5@--> german_c

greetings_json eg6@--> french_c

greetings_json eg7@--> languages_h

languages_h es8@--> main_o

english_h es1@--> english_o

english_h es2@--> main_o

german_h es3@--> german_o

german_h es4@--> main_o

french_h es5@--> french_o

french_h es6@--> main_o

%% languages_h es7@--> liblanguages_a

main_c ec1@--> main_o

%% languages_c ec2@--> languages_o

liblanguages_a ec3@--> demo

main_o ec4@--> demo

english_c ex20@--> english_o

german_c ex21@--> german_o

french_c ex22@--> french_o

english_o ex1@--> liblanguages_a

german_o ex2@--> liblanguages_a

french_o ex3@--> liblanguages_a

greetings_yml eg8@--> greetings_json

%% classDef source fill:#f96

%% classDef generated fill:#bbf,stroke:#3f3,stroke-width:5px,color:#fff,stroke-dasharray: 5 5

%% classDef oexpanded fill:#03f,stroke:#3f3,stroke-width:5px,color:#fff,stroke-dasharray: 5 5

%% classDef ofile fill:#03f,color:#f66

%% classDef compile color:red,stroke-dasharray: 9,5,stroke-dashoffset: 900,animation: dash 25s linear infinite;

classDef e_htosource stroke:#aaa,stroke-width:0.7px,stroke-dasharray: 10,5;

classDef e_generate stroke:#f00,stroke-width:1px;

classDef e_compile stroke:#00f,stroke-width:1px;

classDef e_expand stroke:#3f3,stroke-width:3px;

classDef e_hidden stroke:#fff,stroke-width:1px;

%% class eg1,eg2,eg3,eg4,eg5,eg6,eg7,eg8,eg9,eg10,eg11,eg12,eg13,eg14,eg15,eg16,eg17,eg18,eg19,eg20 e_generated;

class json json;

%% mermaid-animate-tag languages_h

classDef generated fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef source fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef oexpanded fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef ofile fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef e_generated stroke:#eee;

classDef json fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

initial

Frame 2 / 3

---

title: null

animate: null

animate-yml-file: expand.yml

---

flowchart

greetings_yml([greetings.yml]):::source

greetings_json([greetings.json]):::json

main_c([main.c]):::source

subgraph expanded nodes

%%subgraph generated files

languages_h{{languages.h}}:::generated

english_h{{english.h}}:::generated

german_h{{german.h}}:::generated

french_h{{french.h}}:::generated

english_c{{english.c}}:::generated

german_c{{german.c}}:::generated

french_c{{french.c}}:::generated

%%end

%%subgraph expanded files

english_o{{english.o}}:::oexpanded

german_o{{german.o}}:::oexpanded

french_o{{french.o}}:::oexpanded

%%end

end

main_o{{main.o}}:::ofile

liblanguages_a{{liblanguages.a}}:::ofile

demo{{demo}}:::ofile

greetings_json eg1@--> english_h

greetings_json eg2@--> german_h

greetings_json eg3@--> french_h

greetings_json eg4@--> english_c

greetings_json eg5@--> german_c

greetings_json eg6@--> french_c

greetings_json eg7@--> languages_h

languages_h es8@--> main_o

english_h es1@--> english_o

english_h es2@--> main_o

german_h es3@--> german_o

german_h es4@--> main_o

french_h es5@--> french_o

french_h es6@--> main_o

%% languages_h es7@--> liblanguages_a

main_c ec1@--> main_o

%% languages_c ec2@--> languages_o

liblanguages_a ec3@--> demo

main_o ec4@--> demo

english_c ex20@--> english_o

german_c ex21@--> german_o

french_c ex22@--> french_o

english_o ex1@--> liblanguages_a

german_o ex2@--> liblanguages_a

french_o ex3@--> liblanguages_a

greetings_yml eg8@--> greetings_json

%% classDef source fill:#f96

%% classDef generated fill:#bbf,stroke:#3f3,stroke-width:5px,color:#fff,stroke-dasharray: 5 5

%% classDef oexpanded fill:#03f,stroke:#3f3,stroke-width:5px,color:#fff,stroke-dasharray: 5 5

%% classDef ofile fill:#03f,color:#f66

%% classDef compile color:red,stroke-dasharray: 9,5,stroke-dashoffset: 900,animation: dash 25s linear infinite;

classDef e_htosource stroke:#aaa,stroke-width:0.7px,stroke-dasharray: 10,5;

classDef e_generate stroke:#f00,stroke-width:1px;

classDef e_compile stroke:#00f,stroke-width:1px;

classDef e_expand stroke:#3f3,stroke-width:3px;

classDef e_hidden stroke:#fff,stroke-width:1px;

%% class eg1,eg2,eg3,eg4,eg5,eg6,eg7,eg8,eg9,eg10,eg11,eg12,eg13,eg14,eg15,eg16,eg17,eg18,eg19,eg20 e_generated;

class json json;

%% mermaid-animate-tag languages_h

classDef generated fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef source fill:#6f6

classDef oexpanded fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef ofile fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef e_generated stroke:#eee;

classDef json fill:#6f6

known

Frame 3 / 3

---

title: null

animate: null

animate-yml-file: expand.yml

---

flowchart

greetings_yml([greetings.yml]):::source

greetings_json([greetings.json]):::json

main_c([main.c]):::source

subgraph expanded nodes

%%subgraph generated files

languages_h{{languages.h}}:::generated

english_h{{english.h}}:::generated

german_h{{german.h}}:::generated

french_h{{french.h}}:::generated

english_c{{english.c}}:::generated

german_c{{german.c}}:::generated

french_c{{french.c}}:::generated

%%end

%%subgraph expanded files

english_o{{english.o}}:::oexpanded

german_o{{german.o}}:::oexpanded

french_o{{french.o}}:::oexpanded

%%end

end

main_o{{main.o}}:::ofile

liblanguages_a{{liblanguages.a}}:::ofile

demo{{demo}}:::ofile

greetings_json eg1@--> english_h

greetings_json eg2@--> german_h

greetings_json eg3@--> french_h

greetings_json eg4@--> english_c

greetings_json eg5@--> german_c

greetings_json eg6@--> french_c

greetings_json eg7@--> languages_h

languages_h es8@--> main_o

english_h es1@--> english_o

english_h es2@--> main_o

german_h es3@--> german_o

german_h es4@--> main_o

french_h es5@--> french_o

french_h es6@--> main_o

%% languages_h es7@--> liblanguages_a

main_c ec1@--> main_o

%% languages_c ec2@--> languages_o

liblanguages_a ec3@--> demo

main_o ec4@--> demo

english_c ex20@--> english_o

german_c ex21@--> german_o

french_c ex22@--> french_o

english_o ex1@--> liblanguages_a

german_o ex2@--> liblanguages_a

french_o ex3@--> liblanguages_a

greetings_yml eg8@--> greetings_json

%% classDef source fill:#f96

%% classDef generated fill:#bbf,stroke:#3f3,stroke-width:5px,color:#fff,stroke-dasharray: 5 5

%% classDef oexpanded fill:#03f,stroke:#3f3,stroke-width:5px,color:#fff,stroke-dasharray: 5 5

%% classDef ofile fill:#03f,color:#f66

%% classDef compile color:red,stroke-dasharray: 9,5,stroke-dashoffset: 900,animation: dash 25s linear infinite;

classDef e_htosource stroke:#aaa,stroke-width:0.7px,stroke-dasharray: 10,5;

classDef e_generate stroke:#f00,stroke-width:1px;

classDef e_compile stroke:#00f,stroke-width:1px;

classDef e_expand stroke:#3f3,stroke-width:3px;

classDef e_hidden stroke:#fff,stroke-width:1px;

%% class eg1,eg2,eg3,eg4,eg5,eg6,eg7,eg8,eg9,eg10,eg11,eg12,eg13,eg14,eg15,eg16,eg17,eg18,eg19,eg20 e_generated;

class json json;

%% mermaid-animate-tag languages_h

classDef generated fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef source fill:#6f6

classDef oexpanded fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef ofile fill:#fff,stroke:#fff,stroke-width:1px,color:#fff,stroke-dasharray: 5 5;

classDef e_generated stroke:red;

classDef json fill:#6f6